Projects

Our projects span several areas of AI and machine learning, from speculative decoding systems for efficient LLM inference to distributed computing and edge AI. Each project pairs rigorous research with practical application, addressing real-world challenges in modern AI systems.

Below you’ll find our featured projects and ongoing research initiatives, which reflect our commitment to advancing the state of the art in intelligent systems.

Featured

A research project on enhancing the robustness of wireless semantic communication using large AI models and Wasserstein distributionally robust optimization.

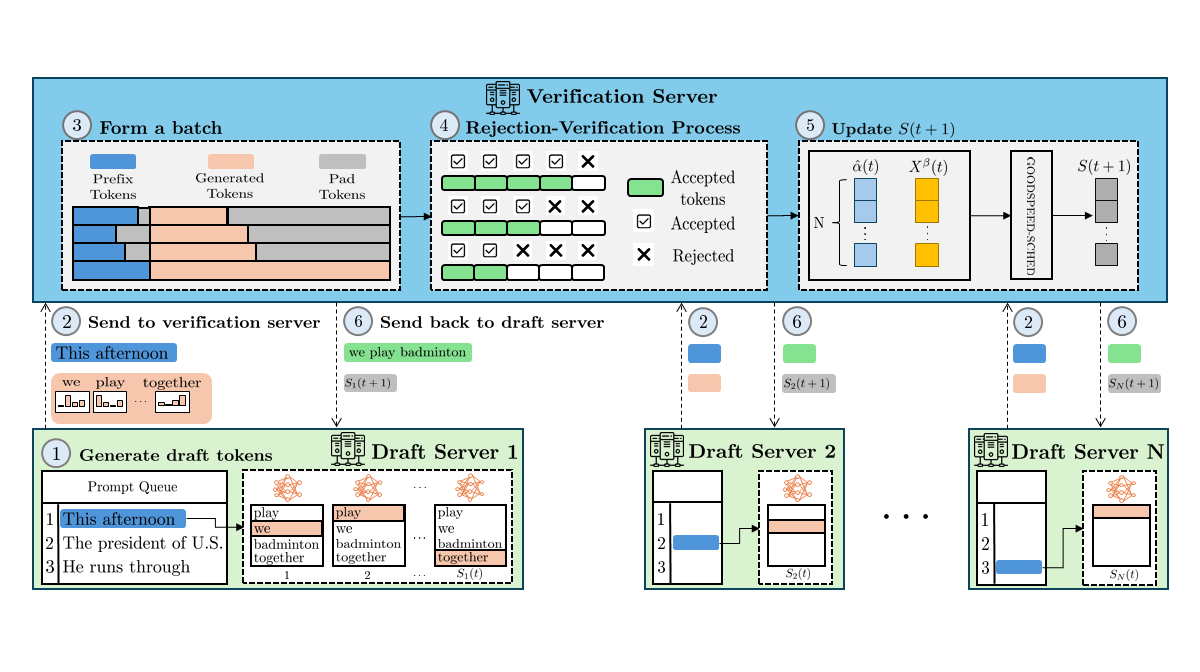

A research project exploring speculative decoding techniques for efficient LLM inference in distributed edge environments. The project is currently in development and focuses on fair resource allocation and optimization strategies for edge computing scenarios.